Everyone has seen the dramatic images produced by the Hubble Space Telescope, from the iconic Pillars of Creation to hundreds of galaxies captured in a single shot, but how are these images created?

The Hubble Telescope captures images in monochrome, like old black-and-white photographs, yet the pictures we see are stunning and vividly colored. So how are they created, and what determines which colors appear where?

To understand how these images are created, we first need to understand what an image is. A typical digital image is a grid of pixels, or dots, each representing a color and an intensity. The pixels are packed so tightly that the eye cannot pick out individual pixels and instead sees a smooth transition from one to the next.

A color image is built from three separate components: red, green and blue. A wide range of other colors can be represented by mixing these primary colors in varying proportions. Each pixel therefore has three values, one each for red, green and blue, on a scale of 0 to 32,768 representing the intensity of that color in the pixel: 0 means none of that color and 32,768 means full intensity. A pixel with an RGB value of 0:0:0 is black, and one with 32,768:32,768:32,768 is white.
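To make the pixel model concrete, here is a minimal Python sketch, assuming the article's example scale of 0 to 32,768 per channel. The names `make_pixel` and `MAX_LEVEL` are illustrative only, not taken from any imaging library.

```python
# Illustrative pixel model, assuming the article's example scale of 0 to 32,768.
MAX_LEVEL = 32_768

def make_pixel(red: int, green: int, blue: int) -> tuple[int, int, int]:
    """Return an (R, G, B) pixel after checking each channel is on the 0..MAX_LEVEL scale."""
    for value in (red, green, blue):
        if not 0 <= value <= MAX_LEVEL:
            raise ValueError(f"channel value {value} outside 0..{MAX_LEVEL}")
    return (red, green, blue)

BLACK = make_pixel(0, 0, 0)
WHITE = make_pixel(MAX_LEVEL, MAX_LEVEL, MAX_LEVEL)
MID_GREY = make_pixel(MAX_LEVEL // 2, MAX_LEVEL // 2, MAX_LEVEL // 2)
ORANGE = make_pixel(MAX_LEVEL, MAX_LEVEL // 2, 0)  # full red plus half green

# A tiny 2 x 2 image: a grid (list of rows) of pixels.
image = [
    [BLACK, MID_GREY],
    [ORANGE, WHITE],
]
```

Equal amounts of all three channels always give a shade of grey; it is the imbalance between channels that produces a color.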

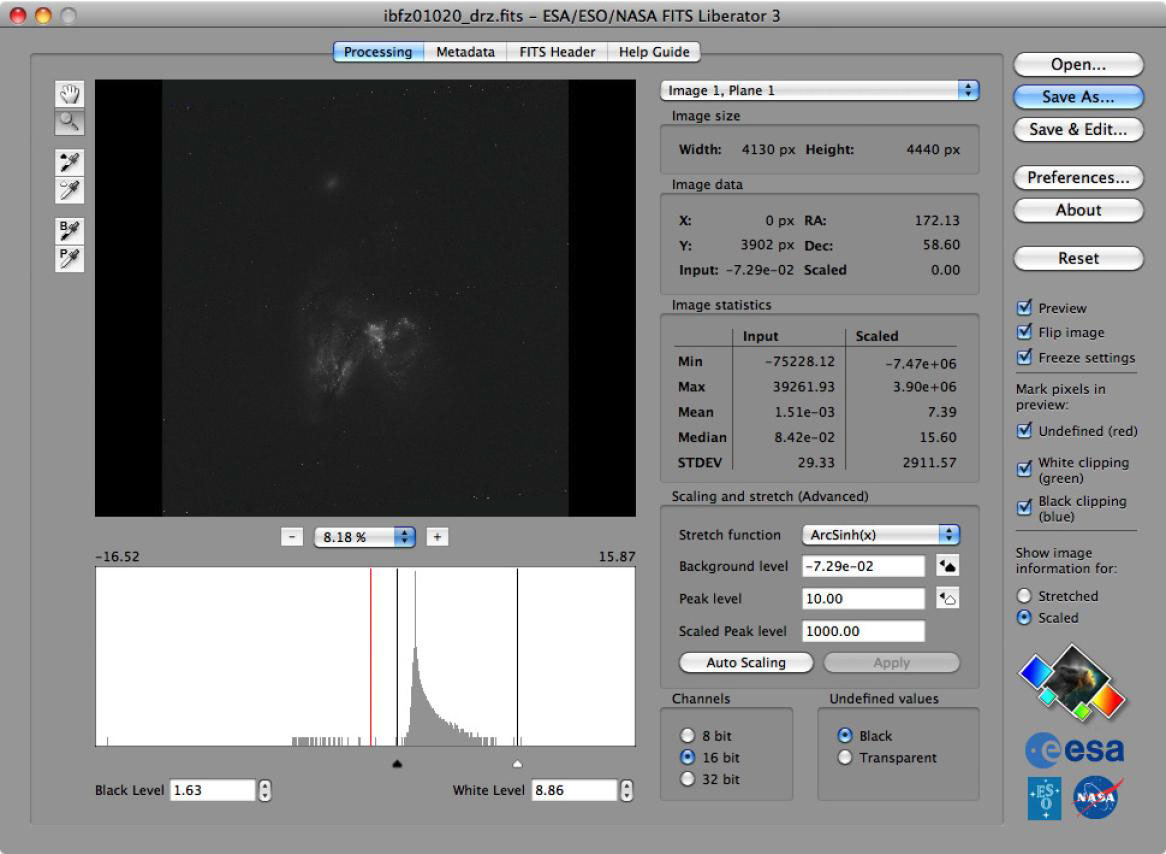

Knowing how an image is constructed, we can start to understand how a Hubble image is put together. Hubble carries several instruments, but the one we will examine in this article is the Wide Field Camera 3, or WFC3. WFC3 can record a much wider range of the spectrum than the human eye can see, from ultraviolet through visible light to near-infrared. Its 16-megapixel sensor is monochrome and produces a greyscale image, so there is just a single value for each pixel, representing the intensity of the light recorded. If an unfiltered image is taken, it includes everything from ultraviolet to near-infrared, and each pixel's value, from 0 to 32,768, represents the sum of all the light reaching that point on the sensor.

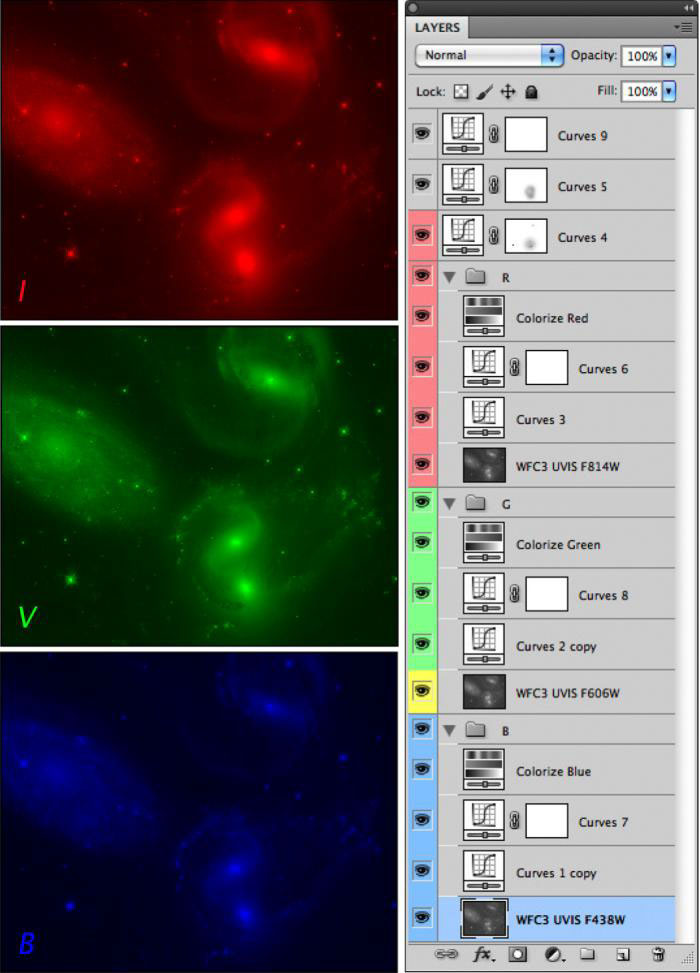

Capturing the entire spectrum at once is not particularly useful, so the image needs to be restricted to certain wavelengths of light. To do this, a series of filters can be placed in front of the camera's sensor, each passing only a particular range of wavelengths. To create a true-color image, the camera must take three images: one through a filter that passes only red light, one passing only green and one passing only blue. Each of these is a monochrome image, but together they can be reconstructed into a normal color image.
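The filtering idea can be sketched in a few lines of Python. This is a toy model under stated assumptions: each pixel's incoming light is a made-up dictionary of wavelength (nm) to intensity, and the red, green and blue passbands are hypothetical round numbers rather than real WFC3 filter curves. A filter simply sums the light inside its band, giving one greyscale value per pixel, and three such frames are merged into color pixels.

```python
# Hypothetical passbands in nanometres: round numbers for illustration,
# not the real WFC3 filter curves.
BROADBAND_FILTERS = {
    "blue": (400, 500),
    "green": (500, 600),
    "red": (600, 700),
}

def filtered_intensity(spectrum, passband):
    """Sum the light one pixel receives inside a filter's passband.

    `spectrum` maps wavelength (nm) to the intensity arriving at that pixel.
    """
    low, high = passband
    return sum(value for wavelength, value in spectrum.items() if low <= wavelength < high)

def monochrome_frame(spectra, passband):
    """One greyscale frame: a single value per pixel, as the sensor records it."""
    return [[filtered_intensity(s, passband) for s in row] for row in spectra]

def combine_rgb(red_frame, green_frame, blue_frame):
    """Merge three aligned greyscale frames into a grid of (R, G, B) pixels."""
    return [list(zip(r, g, b)) for r, g, b in zip(red_frame, green_frame, blue_frame)]

# A single row of two pixels with made-up spectra: a reddish source and a bluish one.
spectra = [[{450: 100, 550: 200, 650: 900}, {450: 800, 550: 300, 650: 50}]]

unfiltered = monochrome_frame(spectra, (0, float("inf")))  # all light summed
frames = {name: monochrome_frame(spectra, band) for name, band in BROADBAND_FILTERS.items()}
color_image = combine_rgb(frames["red"], frames["green"], frames["blue"])
```

The unfiltered frame gives each pixel one total (1,200 and 1,150 here), which cannot say whether the light was red or blue; the three filtered frames keep that information.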

Filters that let a wide range of the spectrum through are known as broadband filters. Narrowband filters, by contrast, pass only a very narrow range of wavelengths, and amateur astronomers use them to eliminate the effects of light pollution. This allows astrophotography to be carried out even from city centres: the light from sodium street lighting is blocked while the wavelengths of interest pass through.

This ability to isolate particular wavelengths is the fundamental principle of narrowband imaging, and it is based on spectroscopy. Each element emits light at its own characteristic wavelengths, so spectroscopy can determine which elements are present from the light they produce. This is particularly useful in astronomy, as it allows the study of particular gases in the universe. Filters have been developed to isolate the wavelengths of the most useful elements so that they can be used for imaging. Most emission nebulae, for example, consist mainly of hydrogen, and a hydrogen-alpha filter can be used to pass only light at a wavelength of 656 nm.
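A narrowband filter can be modelled, very roughly, as a window with a given centre wavelength and width. The sketch below assumes an idealised filter with a sharp cut-off and a hypothetical 7 nm width, and compares it with a wide visible-light filter for sodium street lighting (about 589 nm) and hydrogen-alpha (656.3 nm).

```python
SODIUM_LAMP_NM = 589.0  # sodium street lighting (sodium D line, approximate)
H_ALPHA_NM = 656.3      # hydrogen-alpha emission line

def passes(wavelength_nm, centre_nm, width_nm):
    """Idealised filter: True if the wavelength lies within the band."""
    return abs(wavelength_nm - centre_nm) <= width_nm / 2

# Hypothetical filters: (centre, full width) in nanometres.
FILTERS = {
    "broadband": (550.0, 300.0),   # roughly 400-700 nm visible light
    "H-alpha": (H_ALPHA_NM, 7.0),  # narrow window around 656.3 nm
}

for name, (centre, width) in FILTERS.items():
    print(name, "passes sodium:", passes(SODIUM_LAMP_NM, centre, width),
          "passes H-alpha:", passes(H_ALPHA_NM, centre, width))
# broadband passes sodium: True passes H-alpha: True
# H-alpha passes sodium: False passes H-alpha: True
```

Real filters have sloped edges rather than a hard cut-off, but the principle is the same: the narrower the window, the more unwanted light is rejected.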

This principle of letting only certain wavelengths reach the camera also applies to non-visible light, such as ultraviolet beyond the blue end of the spectrum and infrared beyond the red end. The camera records these wavelengths as differing intensities in monochrome, which gives us a visual representation of data from the non-visible parts of the spectrum. Ultraviolet is particularly important because it traces the hot, young stars in regions where new stars are being created. Infrared, at the other end of the scale, reveals cooler objects such as old and dying stars, sees through dust that blocks visible light, and records light from the most distant galaxies, which set out long ago and has been stretched into the infrared on its journey. Being able to study this data alongside visible light is important for gaining a better understanding of what is happening in the universe.

This raises the question of how to represent light that is normally invisible to the human eye. The solution is to map each invisible band to a visible primary color: for example, ultraviolet data becomes the blue component and infrared data the red component. The same idea underlies the “Hubble Palette”, which amateur astronomers have adopted by mapping narrowband images to color components to create false-color images.
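One common way to decide the mapping is chromatic ordering: sort the filters by wavelength and give the shortest to blue, the middle one to green and the longest to red. The helper below is a hypothetical sketch of that rule; the filter names follow the WFC3 style of encoding an approximate wavelength in nanometres, and the wavelengths used are the nominal values from those names.

```python
def chromatic_mapping(filters):
    """Assign three filters to color channels by wavelength.

    `filters` maps a filter name to its approximate central wavelength (nm).
    The shortest wavelength becomes blue, the middle green and the longest red,
    so the color order in the picture follows the order in the spectrum.
    """
    if len(filters) != 3:
        raise ValueError("expected exactly three filters")
    ordered = sorted(filters, key=filters.get)  # shortest to longest
    return dict(zip(("blue", "green", "red"), ordered))

# Ultraviolet, visible and near-infrared exposures (nominal wavelengths).
mapping = chromatic_mapping({"F814W": 814, "F336W": 336, "F555W": 555})
```

Keeping the spectral order means that, even in a false-color image, bluer parts of the picture still correspond to shorter wavelengths.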

With the theory out of the way, we now need some good, clean images with minimal noise and strong light data. The light being collected is often very faint and almost indistinguishable from the dark background, so images are captured in a way that increases the light signal whilst minimising the noise.

Capturing the light images is very similar to the way astrophotographers capture images on the ground: the telescope takes a number of images, which are then stacked together. On the ground, one reason for stacking is to remove artefacts such as plane and satellite trails. In orbit, Hubble flies higher than any plane, so why is stacking still needed? There may be no planes to contend with, but satellites in higher orbits still pass through the field of view, and cosmic rays striking the sensor are also recorded by the camera.

Stacking images has two effects. Firstly, it removes cosmic rays, satellite trails and other transient, unwanted data. Software compares the same pixel across the individual exposures: if a pixel is bright in one image but not in the others, it is likely to be an artefact and is removed. Secondly, the more images are combined, the more the signal is reinforced while the random background noise is averaged out. The result is a clean image with good light data and lower noise. This process is repeated for each wavelength (filter) that will be incorporated into the final image.
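The stacking step can be sketched as per-pixel outlier rejection followed by averaging. This is a simplified version, assuming aligned frames of equal size and a fixed rejection threshold in the same units as the pixel values; real stacking software typically uses statistical methods such as sigma clipping. A value far from the median of that pixel's stack (for example a cosmic-ray hit) is discarded, and the remaining values are averaged. For independent random noise, averaging N frames reduces the noise by roughly a factor of √N, which is why more frames give a cleaner result.

```python
from statistics import mean, median

def stack_pixel(values, threshold):
    """Combine one pixel's values from several exposures.

    Values further than `threshold` from the median (such as a cosmic-ray hit
    that appears in only one frame) are rejected; the rest are averaged.
    """
    centre = median(values)
    kept = [v for v in values if abs(v - centre) <= threshold]
    return mean(kept) if kept else centre

def stack_frames(frames, threshold=50):
    """Stack aligned, equally sized greyscale frames pixel by pixel."""
    rows, cols = len(frames[0]), len(frames[0][0])
    return [
        [stack_pixel([frame[r][c] for frame in frames], threshold) for c in range(cols)]
        for r in range(rows)
    ]

# Four 2 x 2 exposures of the same faint patch of sky; the second has a cosmic-ray hit.
frames = [
    [[100, 102], [98, 101]],
    [[101, 99], [100, 30000]],
    [[99, 101], [102, 99]],
    [[100, 98], [100, 100]],
]
stacked = stack_frames(frames)
```

Here a plain average of pixel (1, 1) would be 7,575 because of the cosmic-ray hit, while the stacked value stays at the background level of 100.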

The stacked images are then ready to be processed into the final image. First they must be aligned: all the components are brought to the same size and orientation so that the stars line up when the images are layered on top of each other. This has to happen before any further processing, because the images must be in perfect alignment for the color components to merge correctly into the final image.
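Alignment (often called registration) can be illustrated with a deliberately simple approach: find the brightest star in each frame and shift the frame by whole pixels so the stars coincide. This sketch assumes the frames differ only by a translation; real alignment software also handles rotation, scale and sub-pixel shifts, and matches many stars rather than one. The function names are hypothetical.

```python
def brightest_pixel(frame):
    """Return (row, col) of the brightest pixel, used here as a stand-in for a star."""
    return max(
        ((r, c) for r in range(len(frame)) for c in range(len(frame[0]))),
        key=lambda rc: frame[rc[0]][rc[1]],
    )

def shift(frame, dr, dc, fill=0):
    """Shift a frame by whole pixels, filling uncovered edges with `fill`."""
    rows, cols = len(frame), len(frame[0])
    out = [[fill] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            src_r, src_c = r - dr, c - dc
            if 0 <= src_r < rows and 0 <= src_c < cols:
                out[r][c] = frame[src_r][src_c]
    return out

def align_to(reference, frame):
    """Translate `frame` so its brightest star lands where it is in `reference`."""
    ref_r, ref_c = brightest_pixel(reference)
    r, c = brightest_pixel(frame)
    return shift(frame, ref_r - r, ref_c - c)

reference = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]
offset_frame = [[0, 0, 0], [0, 0, 0], [0, 0, 9]]  # same star, one pixel down and right
aligned = align_to(reference, offset_frame)
```

After shifting, the star sits at the same pixel in both frames, so the layers can be combined without color fringes around the stars.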

Once aligned, the images are imported into a graphics package such as Adobe Photoshop, where each becomes a layer within a single image. You can think of this as drawing three images on sheets of transparent tracing paper, stacking them and shining a light through them to project the combined image. Each layer is then assigned a color, so that the combined image is rendered in full color. Finally, adjustment tools are used to brighten the image and increase its contrast, both for the individual layers and for the image overall, to produce the final image.
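The brightening and contrast step is often a levels-style stretch: a chosen black point maps to 0, a white point maps to full intensity, and values in between are rescaled. The sketch below assumes a simple linear stretch on the article's 0 to 32,768 scale and hypothetical black and white points; editing packages also offer more elaborate, non-linear curves.

```python
MAX_LEVEL = 32_768  # the article's example intensity scale

def levels(value, black, white, max_level=MAX_LEVEL):
    """Linear stretch: `black` maps to 0, `white` to max_level; values outside are clipped."""
    if white <= black:
        raise ValueError("white point must be above the black point")
    fraction = (value - black) / (white - black)
    return round(min(max(fraction, 0.0), 1.0) * max_level)

def stretch_layer(frame, black, white):
    """Apply the same levels stretch to every pixel of a greyscale layer."""
    return [[levels(v, black, white) for v in row] for row in frame]

def composite(red_layer, green_layer, blue_layer):
    """Use three stretched greyscale layers as the R, G and B channels."""
    return [list(zip(r, g, b)) for r, g, b in zip(red_layer, green_layer, blue_layer)]

# Faint data occupying only 100-400 of the scale is stretched to fill all of it.
layer = [[50, 100, 250, 400, 500]]
stretched = stretch_layer(layer, black=100, white=400)
```

Stretching each layer separately before combining them lets the faint signal in one filter be balanced against brighter signal in another.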

If the layers were taken with red, green and blue filters, the final image will be a true, lifelike color image. If, on the other hand, the layers contain narrowband data, the color mapping produces a false-color image, and this is where the famous Hubble Palette comes from. The Hubble Palette normally maps the sulphur II data to red, the hydrogen-alpha data to green and the oxygen III data to blue. This color mapping produces the dramatic false-color images we are all used to seeing from the Hubble Telescope.
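The Hubble Palette mapping itself is just a lookup from filter to channel. The sketch below assumes three aligned, stacked narrowband frames keyed by the hypothetical names `SII`, `Ha` and `OIII`. The palette also follows wavelength order: oxygen III (about 500.7 nm) is the shortest and goes to blue, hydrogen-alpha (656.3 nm) goes to green and sulphur II (about 671.6 nm) goes to red.

```python
# Hubble Palette (SHO): which narrowband filter feeds each color channel.
HUBBLE_PALETTE = {"red": "SII", "green": "Ha", "blue": "OIII"}

# Approximate emission-line wavelengths in nanometres.
LINE_WAVELENGTH_NM = {"OIII": 500.7, "Ha": 656.3, "SII": 671.6}

def false_color(frames, palette=HUBBLE_PALETTE):
    """Build (R, G, B) pixels from aligned narrowband frames keyed by filter name."""
    red, green, blue = (frames[palette[channel]] for channel in ("red", "green", "blue"))
    return [list(zip(r, g, b)) for r, g, b in zip(red, green, blue)]

# One row of two pixels: a hydrogen-rich region and an oxygen-rich region.
frames = {"Ha": [[900, 10]], "OIII": [[100, 800]], "SII": [[300, 5]]}
image = false_color(frames)
```

In this toy example the hydrogen-rich pixel comes out mostly green and the oxygen-rich pixel mostly blue.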

This is a rather simplified explanation of the process, and many other steps are applied to the data to produce the final images. The most interesting point, though, is that the processing of Hubble data is very similar to the processing amateur astronomers apply to images from Earth-based telescopes. NASA makes Hubble data available to the public, so it is possible to create your own Hubble images by combining and processing it. This will be the topic of a future article.